Inspired by data, optimized for AI

```python
import fenic as fc

# Keep applicants with more than five years of experience
# whose resume the model judges to show MCP experience
applicants = df.filter(
    (fc.col("yoe") > 5)
    & fc.semantic.predicate(
        "Has MCP Protocol experience? Resume: {{resume}}",
        resume=fc.col("resume"),
    )
)

prompt = """
Is this candidate a good fit for the job?

Candidate Background: {{left_on}}
Job Requirements: {{right_on}}

Use the following criteria to make your decision:
...
"""

# Pair each applicant with the jobs the model judges a good fit
joined = (
    applicants.semantic.join(
        other=jobs,
        predicate=prompt,
        left_on=fc.col("resume"),
        right_on=fc.col("job_description"),
    )
    .order_by("application_date")
    .limit(5)
)
```

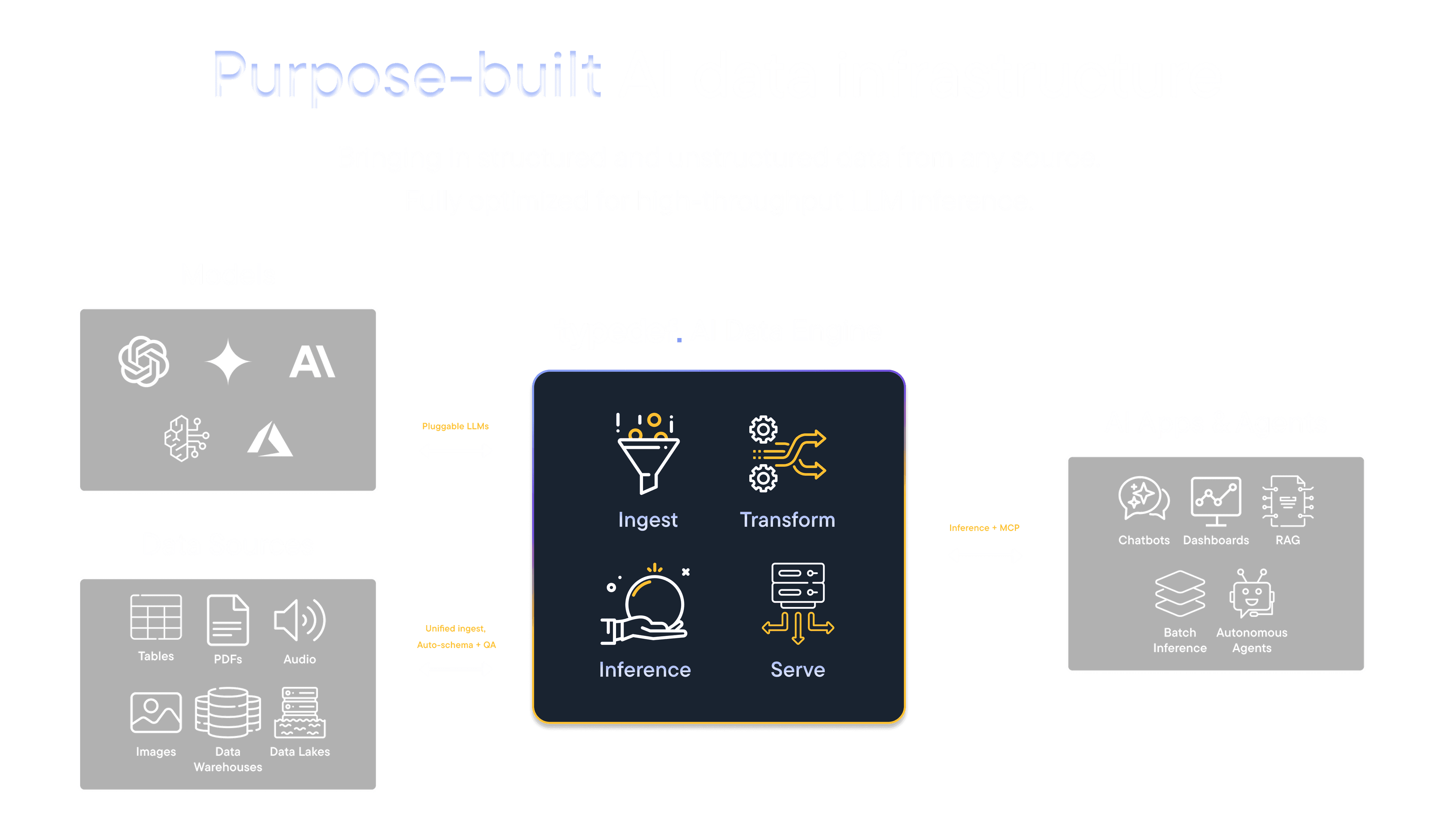

Semantic Operations as DataFrame Primitives

Transform unstructured and structured data using familiar DataFrame operations. If you know PySpark or pandas, you know typedef. Semantic operations such as classification work just like filter, map, and aggregate.
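To make the idea concrete, here is a minimal plain-Python sketch with no fenic dependency: a semantic filter is a row-wise predicate whose yes/no answer comes from a model, and a semantic join evaluates a prompt for every (left, right) pair, much like the filter and join at the top of the page. The `ask` callables (`has_mcp`, `fits`) are hypothetical keyword-matching stand-ins for LLM calls, and `render`, `semantic_filter`, and `semantic_join` are illustrative helpers, not fenic's API or its execution strategy.

```python
from itertools import product
from typing import Callable, Dict, List

Row = Dict[str, object]
Ask = Callable[[str], bool]  # hypothetical stand-in for a yes/no LLM call


def render(template: str, **values: object) -> str:
    """Fill {{name}} placeholders in a prompt template."""
    for name, value in values.items():
        template = template.replace("{{" + name + "}}", str(value))
    return template


def semantic_filter(rows: List[Row], template: str, column: str, ask: Ask) -> List[Row]:
    """Keep each row whose rendered prompt gets a 'yes' from the model."""
    return [row for row in rows if ask(render(template, **{column: row[column]}))]


def semantic_join(left: List[Row], right: List[Row], template: str,
                  left_on: str, right_on: str, ask: Ask) -> List[Row]:
    """Naive nested-loop join: one prompt per (left, right) pair."""
    return [
        {**lrow, **rrow}
        for lrow, rrow in product(left, right)
        if ask(render(template, left_on=lrow[left_on], right_on=rrow[right_on]))
    ]


def has_mcp(prompt: str) -> bool:
    # Keyword stub for an LLM; only inspects the text after "Resume:".
    return "mcp" in prompt.split("Resume:", 1)[1].lower()


def fits(prompt: str) -> bool:
    # Keyword stub for an LLM: does the candidate text mention the job keyword?
    candidate, job = prompt.split(" | Job: ", 1)
    return job.strip().lower() in candidate.lower()


applicants = [
    {"name": "Ana", "yoe": 7, "resume": "Built MCP servers in Rust"},
    {"name": "Bo", "yoe": 8, "resume": "Kubernetes and Go"},
    {"name": "Cy", "yoe": 3, "resume": "MCP tooling in Python"},
]
jobs = [
    {"title": "Rust engineer", "job_description": "rust"},
    {"title": "Python engineer", "job_description": "python"},
]

experienced = [row for row in applicants if row["yoe"] > 5]  # ordinary predicate
shortlist = semantic_filter(
    experienced, "Has MCP experience? Resume: {{resume}}", "resume", has_mcp
)
matches = semantic_join(
    shortlist, jobs, "Candidate: {{left_on}} | Job: {{right_on}}",
    "resume", "job_description", fits,
)
print([(m["name"], m["title"]) for m in matches])  # [('Ana', 'Rust engineer')]
```

Because the nested loop sends one prompt per pair, its cost grows with `len(left) * len(right)`; in this sketch the cheap `yoe` check runs first so fewer rows ever reach the model.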

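```python
df = (
    df
    # Treat the raw blog text as Markdown
    .with_column("raw_blog", fc.col("blog").cast(fc.MarkdownType))
    # Split the document into chunks at its headers
    .with_column(
        "chunks",
        fc.markdown.extract_header_chunks("raw_blog", ...),
    )
    # Pull out the title with a jq expression
    .with_column("title", fc.json.jq("raw_blog", ...))
    # One row per chunk
    .explode("chunks")
    # Embed each chunk's text content
    .with_column(
        "embeddings",
        fc.semantic.embed(fc.col("chunks").content),
    )
)
```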

Rust-Powered Multimodal Engine

The only engine that goes beyond standard multimodal types, with native support for Markdown, transcripts, embeddings, HTML, JSON, and more, each with its own specialized operations. Rust performance meets Python simplicity, so you can process any data at any scale.
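As an illustration of what a Markdown-specific operation does, here is a plain-Python sketch of header chunking followed by an explode, loosely mirroring the `extract_header_chunks` and `.explode("chunks")` steps in the pipeline above. `header_chunks`, its `max_level` parameter, and `explode` are hypothetical helpers; fenic's own function operates on its native Markdown type and may chunk differently.

```python
import re
from typing import Dict, List

# ATX-style Markdown header: 1-6 '#' characters, whitespace, then the title
HEADER = re.compile(r"^(#{1,6})\s+(.*)$")


def header_chunks(markdown: str, max_level: int = 2) -> List[Dict[str, str]]:
    """Split Markdown into sections that start at headers of level <= max_level.

    Deeper headers stay inside the current section. This naive version does not
    skip '#' lines inside fenced code blocks.
    """
    chunks: List[Dict[str, str]] = []
    heading, body = "", []

    def flush() -> None:
        # Emit the current section unless it is completely empty
        if heading or any(line.strip() for line in body):
            chunks.append({"heading": heading, "content": "\n".join(body).strip()})

    for line in markdown.splitlines():
        match = HEADER.match(line)
        if match and len(match.group(1)) <= max_level:
            flush()
            heading, body = match.group(2).strip(), []
        else:
            body.append(line)
    flush()
    return chunks


def explode(rows: List[Dict], column: str) -> List[Dict]:
    """Emit one row per element of a list-valued column, like DataFrame.explode."""
    return [{**row, column: item} for row in rows for item in row[column]]


blog = "# Intro\nWhy fenic.\n## Setup\npip install\n### Details\nmore\n## Usage\nRun it."
rows = [{"id": 1, "chunks": header_chunks(blog)}]
for row in explode(rows, "chunks"):
    print(row["id"], row["chunks"]["heading"])
# 1 Intro
# 1 Setup
# 1 Usage
```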

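```python
from typing import List, Literal

from pydantic import BaseModel

# `Issue` is a nested Pydantic model with a `category` field (definition not shown)

class Ticket(BaseModel):
    customer_tier: Literal["free", "pro", "enterprise"]
    region: Literal["us", "eu", "apac"]
    issues: List[Issue]

tickets = (
    df
    # Extract a Ticket from each raw ticket, then flatten its fields into columns
    .with_column("extracted", fc.semantic.extract("raw_ticket", Ticket))
    .unnest("extracted")
    .filter(fc.col("region") == "apac")
    # One row per issue
    .explode("issues")
)

bugs = tickets.filter(fc.col("issues").category == "bug")
```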

Schema-Driven Extraction

Type-safe structured extraction from unstructured text. Define a schema once and get validated results every time, without brittle prompt engineering or manual validation.
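A stdlib-only sketch of what "validated results" means in practice: parse a model's JSON answer and reject any value outside the schema's `Literal` choices. It swaps the Pydantic model from the snippet above for a dataclass to avoid a dependency, simplifies `issues` to a list of strings (the `Issue` type is not shown in the source), and uses hard-coded JSON strings in place of real model output. `validate` is a hypothetical helper, not how fenic validates internally.

```python
import json
from dataclasses import dataclass, fields
from typing import List, Literal, get_args, get_origin, get_type_hints


@dataclass
class Ticket:
    customer_tier: Literal["free", "pro", "enterprise"]
    region: Literal["us", "eu", "apac"]
    issues: List[str]  # simplified: the original uses a nested Issue model


def validate(raw_json: str) -> Ticket:
    """Parse model output and check it against Ticket's field types."""
    data = json.loads(raw_json)
    hints = get_type_hints(Ticket)
    for field in fields(Ticket):
        if field.name not in data:
            raise ValueError(f"missing field: {field.name}")
        value, hint = data[field.name], hints[field.name]
        # Literal fields: the value must be one of the allowed choices
        if get_origin(hint) is Literal and value not in get_args(hint):
            raise ValueError(f"{field.name}={value!r} not in {get_args(hint)}")
        # List fields: every element must have the declared item type
        if get_origin(hint) is list:
            (item_type,) = get_args(hint)
            if not isinstance(value, list) or not all(isinstance(v, item_type) for v in value):
                raise ValueError(f"{field.name} must be a list of {item_type.__name__}")
    return Ticket(**{field.name: data[field.name] for field in fields(Ticket)})


ok = validate('{"customer_tier": "pro", "region": "apac", "issues": ["login fails"]}')
print(ok.region)  # apac

try:
    validate('{"customer_tier": "gold", "region": "eu", "issues": []}')
except ValueError as err:
    print(err)  # customer_tier='gold' not in ('free', 'pro', 'enterprise')
```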

```python
config = fc.SessionConfig(
    app_name="my_app",
    semantic=fc.SemanticConfig(
        # Register models under short aliases, each with its own rate limits
        language_models={
            "nano": fc.OpenAILanguageModel(
                model_name="gpt-4.1-nano", rpm=500, tpm=200_000
            ),
            "flash": fc.GoogleVertexLanguageModel(
                model_name="gemini-2.0-flash-lite", ...
            ),
        },
        default_language_model="flash",
    ),
    cloud=fc.CloudConfig(...),
)

session = fc.Session.get_or_create(config)
```

Develop Locally, Scale on Cloud

Develop locally with fenic and deploy to typedef cloud instantly. Go from prototype to production with zero code changes: the same code scales automatically.
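The `rpm` and `tpm` values in the session configuration above cap how fast a single model alias can be called, and whichever limit binds first wins. A back-of-the-envelope sketch, assuming a fixed token count per request (prompt plus completion) and one request per row; `RateLimits`, `sustained_rpm`, and `minutes_needed` are hypothetical helpers, and this says nothing about how fenic or typedef cloud actually schedule requests.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RateLimits:
    rpm: int  # maximum requests per minute
    tpm: int  # maximum tokens per minute (assumed to count prompt + completion)


def sustained_rpm(limits: RateLimits, tokens_per_request: int) -> int:
    """Requests per minute allowed once both limits are applied."""
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    return min(limits.rpm, limits.tpm // tokens_per_request)


def minutes_needed(rows: int, limits: RateLimits, tokens_per_request: int) -> int:
    """Lower bound on wall-clock minutes to send one request per row."""
    rate = sustained_rpm(limits, tokens_per_request)
    if rate == 0:
        raise ValueError("a single request exceeds the per-minute token budget")
    return math.ceil(rows / rate)


nano = RateLimits(rpm=500, tpm=200_000)  # values from the "nano" entry above
print(sustained_rpm(nano, 1_000))           # 200: the token limit binds
print(sustained_rpm(nano, 200))             # 500: the request limit binds
print(minutes_needed(10_000, nano, 1_000))  # 50
```

At roughly 1,000 tokens per row, the `nano` model's 200,000-token budget, not its 500-request limit, is what caps throughput.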